Environmental Sensors Data

Sensor.Community is a contributor-driven global sensor network that creates Open Environmental Data. The data is collected from sensors all over the globe. Anyone can purchase a sensor and place it wherever they like. The API for downloading the data is on GitHub, and the data is freely available under the Database Contents License (DbCL).

The dataset has over 20 billion records, so be careful about copying and pasting the commands below unless your resources can handle that volume. The commands below were executed on a Production instance of ClickHouse Cloud.

- The data is in S3, so we can use the `s3` table function to create a table from the files. We can also query the data in place. Let's look at a few rows before attempting to insert it into ClickHouse:
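A minimal sketch of such an in-place query follows. The URL is a placeholder, not the dataset's actual location, and `CSVWithNames` assumes each file starts with a header row. The `format_csv_delimiter` setting tells ClickHouse to split fields on semicolons rather than commas.

```sql
-- Preview a few rows straight from S3 without loading anything.
-- NOTE: the URL is a placeholder (assumption); substitute the real file path.
SELECT *
FROM s3(
    'https://<bucket>.s3.amazonaws.com/<path>/<file>.csv.zst',
    'CSVWithNames'  -- assumes the first line of the file holds column names
)
LIMIT 10
SETTINGS format_csv_delimiter = ';';
```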

The data is in CSV files but uses a semicolon as the delimiter. The rows look like:

- We will use the following `MergeTree` table to store the data in ClickHouse:

- ClickHouse Cloud services have a cluster named `default`. We will use the `s3Cluster` table function, which reads S3 files in parallel from the nodes in your cluster. (If you do not have a cluster, just use the `s3` function and remove the cluster name.)
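A load along these lines might look like the sketch below. The URL is again a placeholder, and `SELECT *` assumes the columns in the files line up with the columns of the `sensors` table. If they do not, list the columns explicitly.

```sql
-- Load every file matching the glob into the sensors table, reading in
-- parallel across the nodes of the 'default' cluster.
-- NOTE: the URL is a placeholder (assumption), and SELECT * assumes the
-- file columns match the table's columns in order and type.
INSERT INTO sensors
SELECT *
FROM s3Cluster(
    'default',
    'https://<bucket>.s3.amazonaws.com/<path>/*.csv.zst',
    'CSVWithNames'
)
SETTINGS format_csv_delimiter = ';';
```

Without a cluster, the same statement should work with `s3(...)` in place of `s3Cluster(...)` and the `'default'` argument removed.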

This query will take a while; it's about 1.67T of uncompressed data:

Here is the response, showing the number of rows and the speed of processing. Rows were ingested at a rate of over 6M rows per second!

- Let's see how much disk storage is needed for the `sensors` table:
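One way to check this is to aggregate the active data parts in the `system.parts` system table, sketched below. The output aliases are illustrative choices, and the query assumes the table is named `sensors`.

```sql
-- Summarize on-disk size, uncompressed size, and row count per disk
-- for the active parts of the sensors table.
SELECT
    disk_name,
    formatReadableSize(sum(data_compressed_bytes)) AS compressed,
    formatReadableSize(sum(data_uncompressed_bytes)) AS uncompressed,
    round(sum(data_uncompressed_bytes) / sum(data_compressed_bytes), 2) AS compression_ratio,
    sum(rows) AS total_rows,
    count() AS part_count
FROM system.parts
WHERE active = 1 AND table = 'sensors'
GROUP BY disk_name
ORDER BY sum(data_compressed_bytes) DESC;
```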

The 1.67T is compressed down to 310 GiB, and there are 20.69 billion rows:

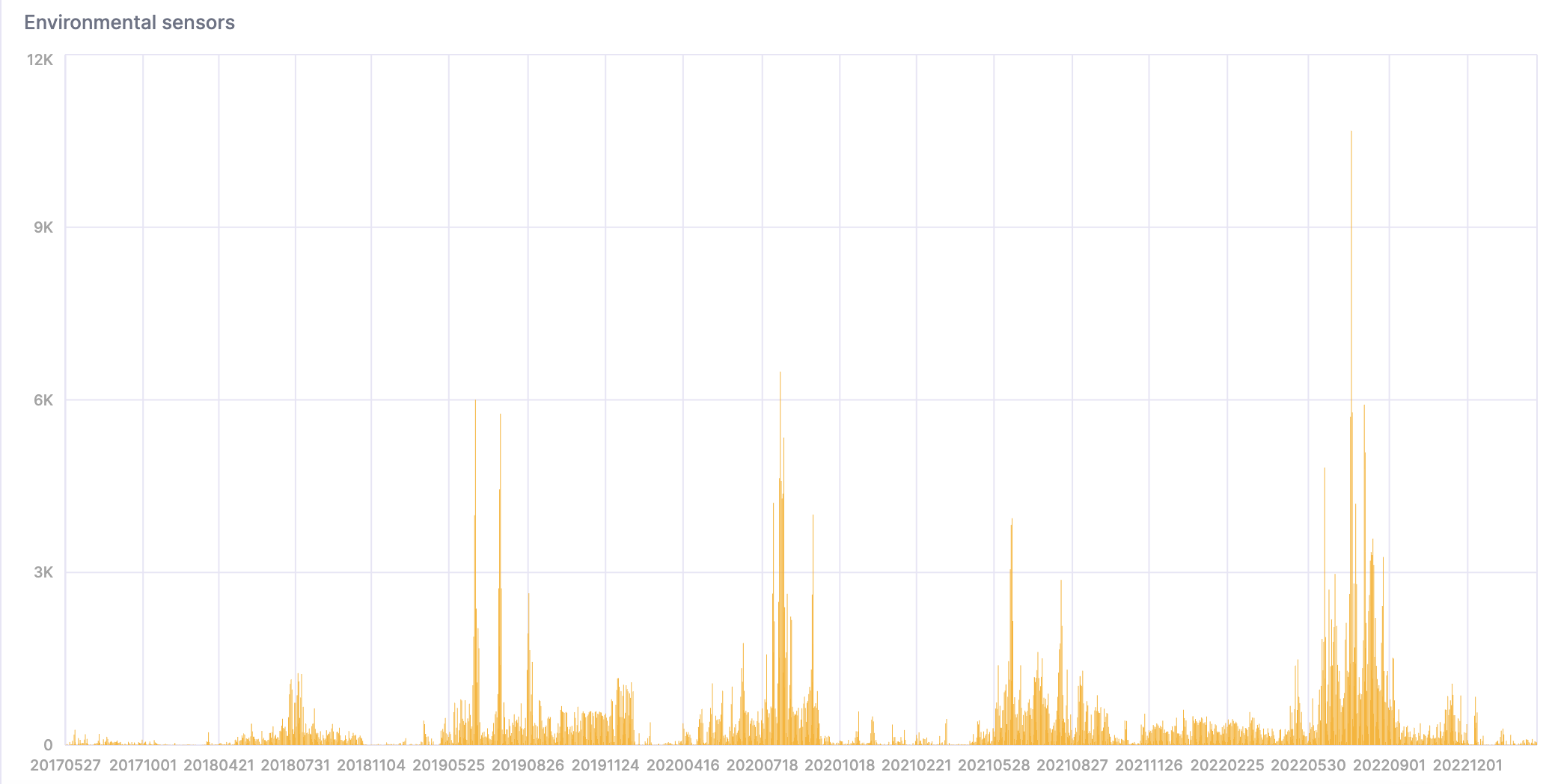

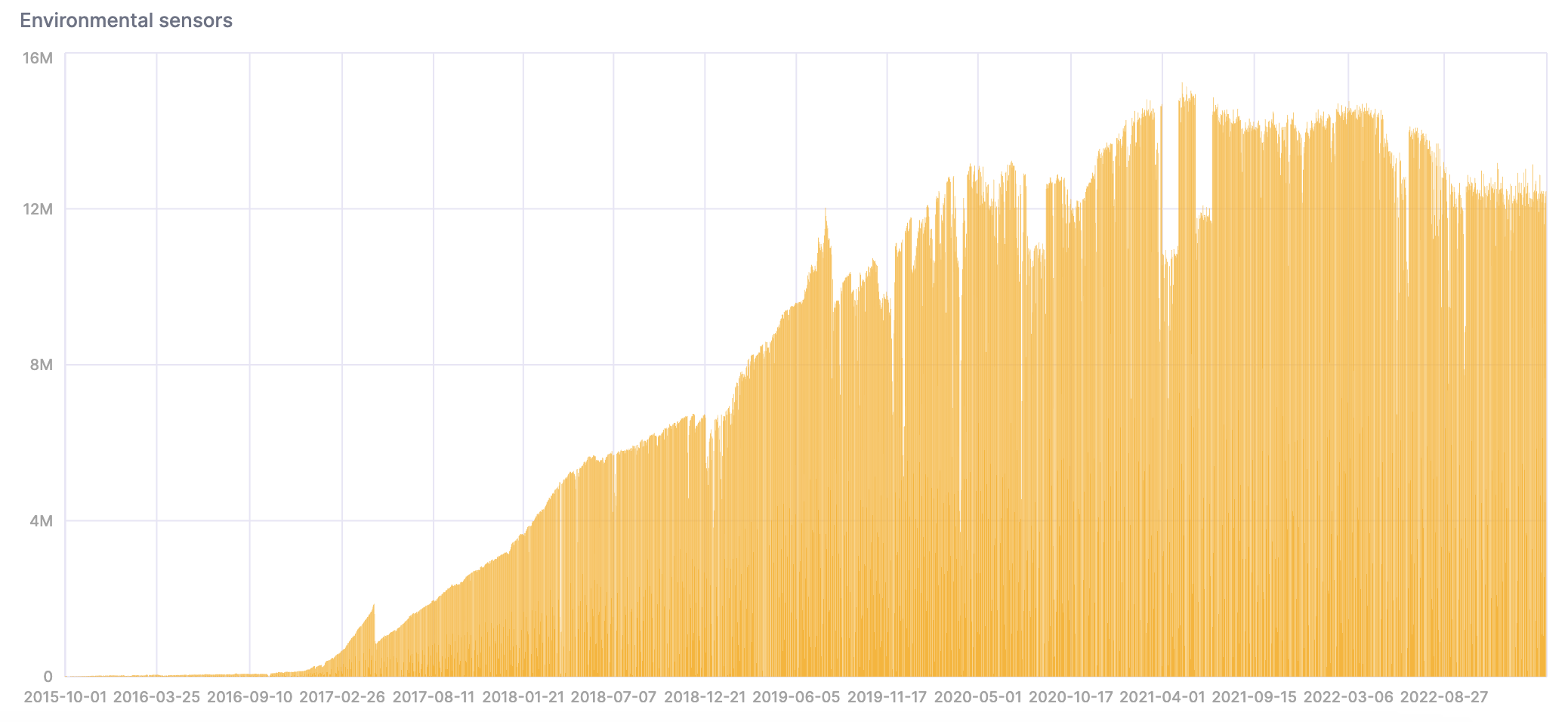

- Let's analyze the data now that it's in ClickHouse. Notice the quantity of data increases over time as more sensors are deployed:
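A daily row count is one way to see this growth. The sketch below assumes the table has a `DateTime` column named `timestamp`; adjust the name to match your schema.

```sql
-- Count readings per calendar day to show how the volume grows over time.
-- NOTE: `timestamp` is an assumed column name.
SELECT
    toDate(timestamp) AS day,
    count() AS readings
FROM sensors
GROUP BY day
ORDER BY day ASC;
```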

We can create a chart in the SQL Console to visualize the results:

- This query counts the number of overly hot and humid days:
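As a rough sketch, such a query could count, for each day, the readings that exceed a temperature and a humidity threshold. The column names `timestamp`, `temperature`, and `humidity` and the thresholds (40 degrees and 90 percent) are assumptions chosen for illustration, not values taken from the dataset documentation.

```sql
-- For each day, count the readings that are both very hot and very humid.
-- NOTE: column names and thresholds are assumptions; tune them to your data.
WITH toDate(timestamp) AS day
SELECT
    day,
    count() AS hot_humid_readings
FROM sensors
WHERE temperature >= 40 AND humidity >= 90
GROUP BY day
ORDER BY day ASC;
```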

Here's a visualization of the result: